Kevin Spencer recreated the classic chair at 1:20 scale with a 3D printer.

h/t fubiz

“[T]he most important thing about language is its capacity for generating imagined communities, building in effect particular solidarities.” Benedict Anderson

I’m at Red Hat Summit this week talking about cloud with customers and partners, and it occurs to me that one of the common metaphors isn’t quite right. The problem with the “assembly line” metaphor is that everyone thinks of the 1907 Ford (“any color you want, as long as it’s black”), and that’s actually a lousy example. There was zero flexibility in product output, and the only automation beyond individual parts was the well-defined hand-off during assembly. Don’t underestimate the power of those elements, but they are nothing compared to what we can do today.

The right model is Chevrolet’s: build knowing that the products you need tomorrow are different from the ones you need today. Build knowing you will change your process while it’s still running. It’s no wonder that once Chevy implemented this, it beat industry leader Ford to market by a full year while continuing to serve its current customers, and took the lion’s share of the entire car market.

If your cloud isn’t open and changeable, your competitors will out-innovate you and take your market.

{ photo from excellent slide show on 100 years of assembly lines at Chevrolet and GM: http://www.assemblymag.com/articles/89625-100-years-of-chevrolet-assembly-lines }

[ update: corrected link to Red Hat Summit keynote streaming ]

I would like to programmatically generate a report using R. The contents are mostly graphs and tables. I have a working system, but it has too many pieces. When I hand it off to someone else, it immediately becomes fragile.

Isn’t there a better way? Here are my elements:

That’s four languages. Ugly.

You already have a physical presence at your customers, why not give them a distributed data center too? German company AoTerra is building OpenStack into their heating systems.

Will the lower hardware and HVAC requirements outweigh the operating costs borne of density in a traditional data center? I can’t wait to find out.

http://gigaom.com/2013/05/24/meet-the-cloud-that-will-keep-you-warm-at-night/

A couple of weeks ago, I announced that I had successfully installed and run R/rpy2 on OpenShift.com:

Ok #OpenShift ers, and #Rstats geeks. I have rpy2 running on OpenShift. What's next? http://t.co/Hu781FRFWT

— Erich Morisse (@emorisse) April 24, 2013

Now, you can grab the installation process and bits for yourself* through github.

http://github.com/emorisse/ROpenShift

*I’d prefer (and will be thankful for) commits, hacks, advice, and ideas over code branches.

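conditionalEntropy <- function(graph) {
  # graph is a 2- or 3-column data frame: from, to, and an optional weight
  if (ncol(graph) == 2) {
    names(graph) <- c("from", "to")
    graph$weight <- 1  # unweighted edge list: every edge counts once
  } else if (ncol(graph) == 3) {
    names(graph) <- c("from", "to", "weight")
  } else {
    stop("graph must have 2 or 3 columns")
  }

  # Number of distinct from/to pairs; unique() does not depend on row order, unlike rle()
  nEdges <- length(unique(paste(graph$from, graph$to)))
  total <- sum(graph$weight)
  p <- graph$weight / total  # share of total weight carried by each row

  entropy <- data.frame(H = 0, Hmax = 0)
  # Shannon entropy of the edge-weight distribution, in bits
  entropy$H <- -sum(p * log2(p))
  # Maximum-entropy term, as defined in the original function
  entropy$Hmax <- log2(nEdges * (nEdges - 1))
  return(entropy)
}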
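Written out, the two quantities the function returns are as follows, where $w_i$ is the weight of row $i$, $W = \sum_i w_i$ is the total weight, and $m$ is the number of distinct from/to pairs the function counts:

$$
H = -\sum_i \frac{w_i}{W}\log_2\frac{w_i}{W}, \qquad H_{\max} = \log_2\bigl(m(m-1)\bigr)
$$

Dividing by log(2) in R is what turns the natural logarithm into base 2, so both values are in bits.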

After you’ve done all of the hard work of creating the perfect model that fits your data comes the hard part: does it make sense? Have you overfitted your data? Do the results confirm your expectations or surprise you? If they surprise you, is that because there really is a surprise, or because your model is broken?

Here’s an example: iterating on the same CloudForms data as the past few posts, we get subtle variations on the relationship between CPU and memory usage, shown through linear regressions in R. Grey dashed = the relationship across all servers/VMs and data points, without taking per-server variance into account; it says that, generally, more CPU usage means more memory consumed. Blue dashed = letting the intercept, but not the slope, vary by server (using factor() in lm()); it reinforces the CPU/memory relationship, but suggests it is weaker than the previous model implies. The black line varies both slope and intercept by server/VM with lmer().
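A minimal sketch of the three fits, assuming a data frame `usage` with hypothetical columns `server`, `cpu`, and `mem` (simulated below, not the CloudForms data). The pooled and per-server-intercept models use lm() as described. For the varying slope and intercept, the sketch fits a fixed-effects interaction with lm(), which always runs, and only calls lme4::lmer() if the lme4 package is installed.

```r
set.seed(42)
# Hypothetical simulated data: 5 servers, 50 samples each
n_servers <- 5
n_obs <- 50
usage <- data.frame(
  server = factor(rep(paste0("vm", 1:n_servers), each = n_obs)),
  cpu    = runif(n_servers * n_obs, 0, 100)
)
# Each simulated server gets its own intercept and slope, plus noise
server_int   <- rnorm(n_servers, mean = 500, sd = 100)
server_slope <- rnorm(n_servers, mean = 2, sd = 0.5)
idx <- as.integer(usage$server)
usage$mem <- server_int[idx] + server_slope[idx] * usage$cpu +
  rnorm(nrow(usage), sd = 20)

# Grey dashed: one line for every server and data point
m_pooled <- lm(mem ~ cpu, data = usage)
# Blue dashed: per-server intercept via factor(), shared slope
m_intercept <- lm(mem ~ cpu + factor(server), data = usage)
# Fixed-effects stand-in for varying both slope and intercept per server
m_full <- lm(mem ~ cpu * factor(server), data = usage)

# Mixed-model version (random intercept and slope), only if lme4 is available
if (requireNamespace("lme4", quietly = TRUE)) {
  m_mixed <- lme4::lmer(mem ~ cpu + (cpu | server), data = usage)
}

# In-sample comparison: lower AIC = better fit after a parameter penalty
AIC(m_pooled, m_intercept, m_full)
```

In-sample criteria like AIC will tend to reward the more flexible fits, which is the overfitting question in miniature. For generalizing to a new VM, a mixed model estimates a population-level slope plus per-server deviations, rather than a separate fixed coefficient for each server it has already seen.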

So what’s the best model? Good question; I’m looking for input. I’d like a model that I can generalize to new VMs, which suggests one of the two less tightly fitted models.

Many thanks to Edwin Lambert who, many years ago, beat into my skull that understanding, not numbers, is the goal.

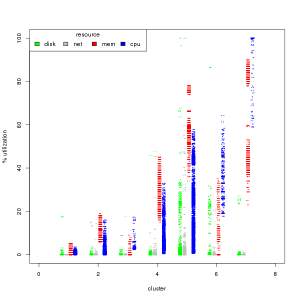

I want to know how to characterize my workloads in the cloud. With that, I should be able to find systems that are over-provisioned as well as those that are resource-starved, to aid in right-sizing and capacity planning. CloudForms by Red Hat can do this at the system level, which is where you would most likely take any action, but I want to see if there’s any additional value in understanding workloads at the aggregate level.

We’ll work backwards for the impatient. I found 7 unique workload types by creating clusters of cpu, mem, disk, and network use through k-means of the short-term data from CloudForms (see the RGB/Gray graph nearby). The cluster numbers are arbitrary, but ordered by median cpu usage from least to most.
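A minimal sketch of the clustering step, assuming a data frame with hypothetical columns `cpu`, `mem`, `disk`, and `net` (random stand-in values here, not the CloudForms data). The columns are standardized first because the metrics are in different units, and the arbitrary kmeans() labels are then renumbered by median CPU, lowest first.

```r
set.seed(1)
n <- 300
# Hypothetical stand-in for the short-term metrics (one row per sample)
metrics <- data.frame(
  cpu  = runif(n, 0, 100),
  mem  = runif(n, 0, 100),
  disk = rexp(n, rate = 1 / 50),
  net  = rexp(n, rate = 1 / 20)
)

# k-means on standardized columns; nstart > 1 reduces sensitivity to the random start
fit <- kmeans(scale(metrics), centers = 7, nstart = 25, iter.max = 50)

# Median CPU per raw cluster id (tapply returns these in cluster-id order 1..7)
med_cpu <- tapply(metrics$cpu, fit$cluster, median)

# New label: 1 = lowest median CPU, 7 = highest
relabel <- rank(med_cpu, ties.method = "first")
metrics$workload <- as.integer(relabel[fit$cluster])

# Median of each metric per workload type, one row per type
aggregate(. ~ workload, data = metrics, FUN = median)
```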

From left to right, rough characterizations of the clusters are:

CloudForms by Red Hat has extensive reporting and predictive analysis built into the product. But what if you already have a reporting engine, or want to do analysis not already built into the system? This project was created as an example of using CloudForms with external reporting tools (our example uses R). Take special care: a lot of state is built into the product, so you can miss context around the data. For guaranteed correctness, use the built-in “integrate” functionality.

Both the data collection and the analyses are reasonably fast for what they do, but they aren’t instant. Be patient: calculating the CPU confidence intervals for 73,000 values across 120 systems took about 90 seconds (elapsed time) on a 2011 laptop.

Required R libraries

forecast

DBI

RPostgreSQL

Installing RPostgreSQL required the postgresql-devel RPM on my Fedora 14 box.

See: collect.R for example to get started. Full code is available on github.
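For orientation only, a sketch of pulling one metric column through DBI. This is not collect.R: the function names are hypothetical, and the table names (`metrics` for short-term data, `metric_rollups` for hourly rollups) and the `resource_id`/`timestamp` columns are assumptions about the CloudForms schema, so check them against your database.

```r
# Hypothetical helper: build the SQL for one metric column.
# ASSUMED schema: tables "metrics" / "metric_rollups" with resource_id and timestamp columns.
build_metric_query <- function(field = "cpu_usage_rate_average",
                               table = c("metrics", "metric_rollups")) {
  table <- match.arg(table)
  # Column names cannot be bound as query parameters, so only allow
  # simple lowercase identifiers (letters, digits, underscores).
  stopifnot(grepl("^[a-z_][a-z0-9_]*$", field))
  sprintf('SELECT resource_id, "timestamp", %s FROM %s ORDER BY resource_id, "timestamp"',
          field, table)
}

# Hypothetical wrapper: con is an open DBI connection, for example from
# DBI::dbConnect(RPostgreSQL::PostgreSQL(), dbname = , host = , user = )
fetch_metric <- function(con, ...) {
  DBI::dbGetQuery(con, build_metric_query(...))
}

build_metric_query("mem_usage_absolute_average", "metric_rollups")
```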

Notes on confidence intervals

Confidence intervals express how likely it is that a value will fall within a given range. The 80% confidence interval is the range the value is expected to fall within 80% of the time. It is narrower than the 95% interval, so a threshold that falls inside the 80% interval is more likely to be reached than one that falls only inside the 95% interval. E.g., if you are going to hit your memory threshold within the 80% interval, address those limits before the ones that only fall within the 95% interval.
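The project computes these intervals with the forecast package. As a dependency-free stand-in, the sketch below uses base R’s arima() and predict() to build 80% and 95% intervals from the forecast standard errors (assuming normally distributed errors), then ranks a hypothetical memory threshold against them. The series and the threshold are made up for illustration.

```r
set.seed(7)
# Hypothetical memory-usage series (percent) with a slow upward drift
mem <- ts(50 + cumsum(rnorm(200, mean = 0.1, sd = 1)))

fit <- arima(mem, order = c(1, 1, 0))
pred <- predict(fit, n.ahead = 24)  # list with $pred (point forecast) and $se

# Two-sided normal interval: point forecast +/- z * standard error
interval <- function(level) {
  z <- qnorm(1 - (1 - level) / 2)  # about 1.28 for 80%, 1.96 for 95%
  list(lower = pred$pred - z * pred$se, upper = pred$pred + z * pred$se)
}
i80 <- interval(0.80)
i95 <- interval(0.95)

threshold <- 90  # hypothetical memory threshold, percent
priority <- if (any(i80$upper >= threshold)) {
  "address first: threshold inside the 80% interval"
} else if (any(i95$upper >= threshold)) {
  "address next: threshold only inside the 95% interval"
} else {
  "no action: threshold outside both intervals"
}
priority
```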

Notes on frequencies

Frequencies in the included functions are expressed as multiples of the collection interval. Short-term metrics are collected at 20-second intervals; rollup metrics are at 1-hour intervals. Example: for 1-minute intervals with short-term metrics, use a frequency of 3.
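The conversion is simple arithmetic: the frequency is the target period divided by the collection interval. A small helper with a hypothetical name, which also rejects periods that are not a whole multiple of the interval:

```r
# Collection intervals from the notes, in seconds
short_term_secs <- 20
rollup_secs <- 60 * 60

# Hypothetical helper: frequency = desired period / collection interval
to_frequency <- function(period_secs, collection_secs) {
  f <- period_secs / collection_secs
  if (f < 1 || f != round(f)) {
    stop("period must be a whole multiple of the collection interval")
  }
  as.integer(f)
}

to_frequency(60, short_term_secs)     # 3: 1-minute periods from short-term data
to_frequency(24 * 3600, rollup_secs)  # 24: daily periods from hourly rollups
```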

Notes on fields

These are column names from the CloudForms (CF) database. The default field is cpu_usage_rate_average. I also recommend looking at mem_usage_absolute_average.

Notes on graphs

Graphs for the systems are shown for the first X systems (up to “max”) with sufficient data to perform the analysis (# of data points > frequency * 2) and that have a range of data, e.g. min < max. Red point = min, blue point = max.
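A sketch of that selection rule, with hypothetical function and argument names: keep systems with more than `frequency * 2` data points and a non-constant range, then take up to `max_systems` of them (named so as not to shadow R’s max()). Here “first” means first in sorted system-name order, which is an assumption about how the original orders systems.

```r
# Hypothetical helper: which systems have enough data to analyze and graph
select_systems <- function(values, system, frequency, max_systems) {
  by_sys <- split(values, system)  # one numeric vector per system, sorted by name
  ok <- vapply(by_sys, function(v) {
    v <- v[!is.na(v)]
    # enough points for the analysis, and a real range (min < max)
    length(v) > frequency * 2 && min(v) < max(v)
  }, logical(1))
  head(names(by_sys)[ok], max_systems)
}

# Positions of the points the graphs mark: red = min, blue = max
extremes <- function(v) c(min = which.min(v), max = which.max(v))

values <- c(1:7, rep(5, 7), 1, 2)
system <- c(rep("a", 7), rep("b", 7), rep("c", 2))
select_systems(values, system, frequency = 3, max_systems = 10)  # "b" is flat, "c" too short
```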

Example images

*.raw.png images are generated from the short-term metrics; the others are generated from the rollup data.