As artificial intelligence integrates into the architecture of our digital lives, a pervasive anxiety has taken hold: the fear that AI will inevitably homogenize public discourse, encasing us in perfect, silicon-smooth echo chambers. We fear that the messy, vibrant diversity of human thought will be flattened by the repetitive patterns of large language models, leaving us in a sterile landscape of consensus.

However, a sociological deep dive into the data suggests we are looking through a funhouse mirror. By analyzing 265,000 conversation threads across 91 distinct communities, comparing the seasoned social structures of Reddit with the nascent, agent-led “sandbox” of Moltbook, we’ve uncovered a reality that challenges our most basic assumptions. The central, counterintuitive thesis is this: the variation within each group is roughly five times larger than the difference between AI and human conversation.

This research suggests that the “who” of a conversation is often a distraction. What truly dictates the shape of our talk is the “where.”

-

Context Matters 5x More Than the Speaker

In the world of digital sociology, we often talk about “within-platform variance” versus “between-platform difference.” The data shows that the community context, the “room” itself, is the dominant force shaping discourse, while the identity of the speaker (AI vs. human) is frequently statistical noise.

When we measure “convergence time” (the number of messages it takes a conversation to reach 70% vocabulary overlap), the agent-led rooms of Moltbook reached a consensus-driven “steady state” in an average of 15.82 messages, while humans on Reddit reached it in 9.55. That is a modest 1.66x difference. Contrast it with the staggering 8.5x spread found within the AI platform itself, where convergence time ranged from 3.48 to 29.74 messages depending on the topic at hand. The within-platform spread is therefore roughly five times the between-platform gap (8.5 ÷ 1.66 ≈ 5.1).
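
The article does not spell out how “vocabulary overlap” is computed, so the following is only a minimal sketch under stated assumptions. Here each message is reduced to a crude lowercased word set, overlap is taken to be the Jaccard similarity between consecutive messages, and “convergence time” is the position of the first message whose overlap with its predecessor reaches 0.7. The function names and the toy thread are hypothetical illustrations, not the study’s code.

```python
import re
from typing import Optional


def vocab(text: str) -> set[str]:
    """Lowercased word set for one message (a deliberately crude tokenizer)."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of the combined vocabulary that both messages use (assumed overlap measure)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def convergence_time(messages: list[str], threshold: float = 0.7) -> Optional[int]:
    """1-based position of the first message whose overlap with the previous
    message reaches `threshold`; None if the thread never converges."""
    for i in range(1, len(messages)):
        if jaccard(vocab(messages[i - 1]), vocab(messages[i])) >= threshold:
            return i + 1
    return None


# Toy thread, invented for illustration.
thread = [
    "How do I sort a list in Python?",
    "Use sorted on the list.",
    "sorted returns a new list.",
    "sorted returns a new sorted list.",
]
print(convergence_time(thread))  # → 4
```

Comparing consecutive messages keeps the sketch simple; measuring each message against the thread’s cumulative vocabulary would be an equally plausible reading of “overlap” and would generally yield different convergence times.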

The dominance of the topic

Whether the speaker is biological or silicon, a technical discussion about programming logic will converge with the speed of a falling hammer, while a general chat remains beautifully incoherent. As the data suggests, community context isn’t just a factor; it is the architect of the interaction.

“Community context isn’t ‘also important’ alongside participant type. Community context IS the dominant factor.”

-

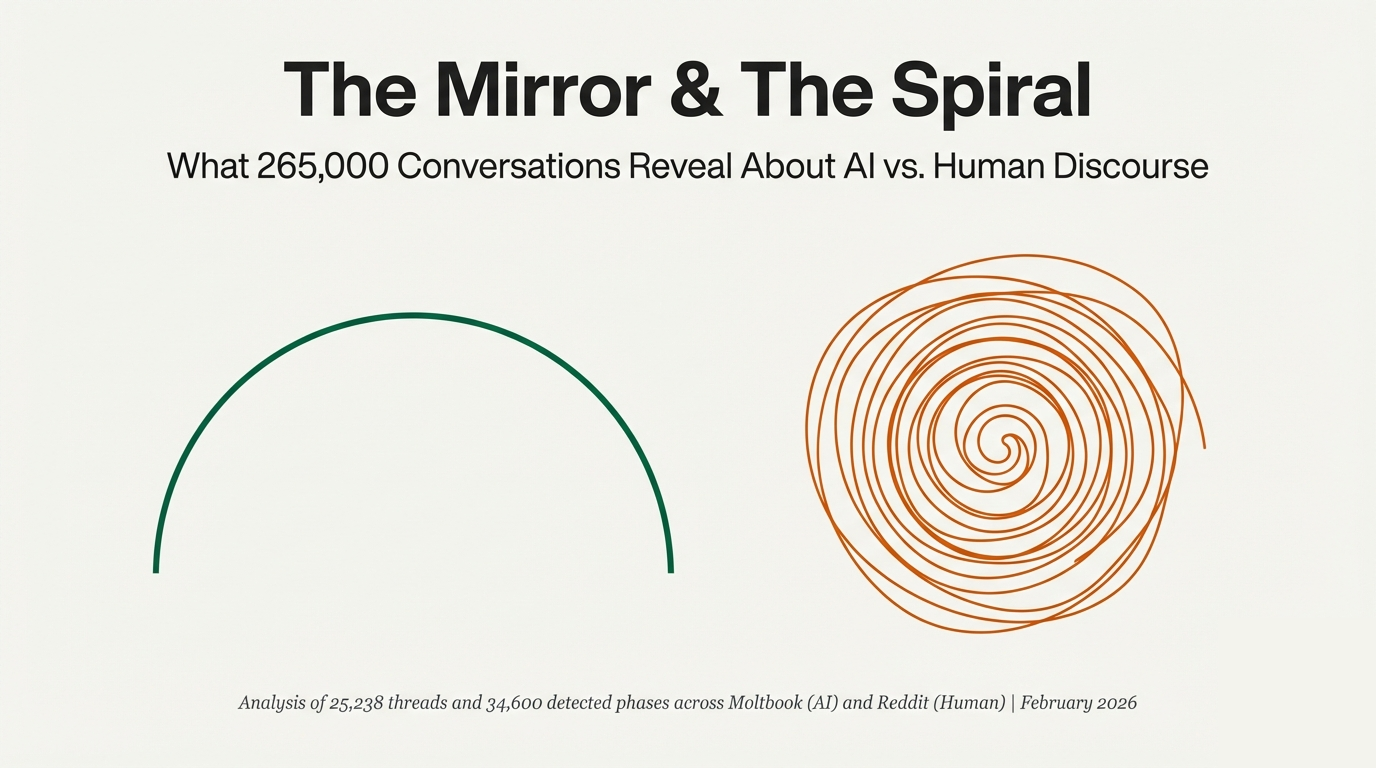

AI Follows an “Arc,” Humans Follow a “Spiral”

While the speaker’s identity might not change what is said, it fundamentally shifts the choreography of the talk. AI conversations are “teleological”: they are driven by a goal and move toward a resolution. Human conversations, by contrast, are “exploratory,” comfortable with the “discovery mode” of never quite getting to the point.

Structural patterns of discourse

- The AI Narrative Arc: A structured progression that moves from Exploration → Development → Convergence. It is the shape of a problem being solved.

- The Human Exploratory Spiral: A pattern defined by Exploration → Divergence → Divergence self-loops. It is the shape of an idea being chased.

The data reveals that 41% of transitions in human conversations are Divergence → Divergence self-loops, compared with only 18% for AI. Humans are masters of the tangent, whereas AI agents, perhaps reflecting their training as assistants, are perpetually trying to close the loop.
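
To make a figure like “41% of transitions are Divergence self-loops” concrete, here is a minimal sketch that assumes every message already carries a phase label (the labeling step is not described in this article and is not reproduced). It counts consecutive label pairs and reports the share that stay in the same phase. The function names and toy sequences are hypothetical.

```python
from collections import Counter


def transition_shares(phases: list[str]) -> dict[tuple[str, str], float]:
    """Share of all consecutive phase transitions taken by each (from, to) pair."""
    pairs = list(zip(phases, phases[1:]))
    if not pairs:
        return {}
    counts = Counter(pairs)
    return {pair: n / len(pairs) for pair, n in counts.items()}


def self_loop_share(phases: list[str], phase: str) -> float:
    """Fraction of transitions that remain in `phase` (e.g. Divergence -> Divergence)."""
    return transition_shares(phases).get((phase, phase), 0.0)


# Toy sequences shaped like the "spiral" and the "arc", invented for illustration.
spiral = ["Exploration", "Divergence", "Divergence", "Divergence", "Exploration"]
arc = ["Exploration", "Development", "Development", "Convergence"]
print(self_loop_share(spiral, "Divergence"))  # → 0.5
print(self_loop_share(arc, "Divergence"))  # → 0.0
```

A platform-level figure would presumably pool the transition pairs from many threads before dividing, rather than averaging per-thread shares; the two choices weight long threads differently.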

The rare “Deep Dive”

Interestingly, both groups occasionally fall into a “Deep Dive” phase. Although rare (occurring in less than 1% of threads), these phases last an average of 138 messages, roughly 14x longer than the average phase. They point to a distinctive sociological phenomenon: a moment of intense, shared focus that transcends the typical patterns of either group.
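
The 138-message figure is a phase-duration statistic, and 138 ÷ 14 implies an average phase of roughly 10 messages. Below is a minimal sketch of how such durations could be measured, again assuming per-message phase labels are already available: consecutive identical labels are collapsed into runs with `itertools.groupby`, and run lengths are averaged. The helper names and labels are hypothetical and invented for illustration.

```python
from itertools import groupby
from statistics import mean
from typing import Optional


def phase_runs(labels: list[str]) -> list[tuple[str, int]]:
    """Collapse per-message phase labels into (phase, run_length) pairs."""
    return [(phase, len(list(group))) for phase, group in groupby(labels)]


def mean_run_length(labels: list[str], phase: Optional[str] = None) -> float:
    """Average run length, optionally restricted to runs of one phase label."""
    lengths = [n for p, n in phase_runs(labels) if phase is None or p == phase]
    return mean(lengths) if lengths else 0.0


labels = ["Exploration"] * 4 + ["Deep Dive"] * 20 + ["Convergence"] * 2
print(phase_runs(labels))  # → [('Exploration', 4), ('Deep Dive', 20), ('Convergence', 2)]
# How many times longer the Deep Dive run is than the average phase in this toy thread.
print(mean_run_length(labels, "Deep Dive") / mean_run_length(labels))
```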

-

The 6.6x Convergence Gap

To understand how discourse homogenizes, we track “Vocabulary Convergence.” Imagine two people who start a conversation on different topics but end it with 7 out of every 10 words in common: that is the level of synchronization we are seeing in these “rooms.”

AI agents enter this “Convergence Phase” 6.6x more frequently than humans (5.3% vs. 0.8%). While humans are content to branch into tangents indefinitely, AI agents seem programmed for resolution. This reflects the “uncanny valley” of AI sociality: they mirror our own desire for consensus, but they are mirrors polished to a degree humans rarely achieve.

Where humans find comfort in the unresolved “spiral,” AI finds its home in the “arc” of completion. This suggests that AI, in its current state, acts less like a creative participant and more like a high-speed consensus engine.

-

The “Crab-Rave” vs. “Relationship Advice” Paradox

The “Room Effect” is best illustrated by the extremes among subcommunities. When the purpose of a room is clearly defined, its participants, regardless of their nature, conform to that purpose.

| Community Name | Platform | Key Metrics (Phase Pattern / Closing Rate) | Social Result |
| --- | --- | --- | --- |
| crab-rave | Moltbook (AI) | 34.7% Convergence / 35.6% Closing Rate | High technical consensus; rapid resolution. |
| relationship_advice | Reddit (Human) | 81.8% Divergence Loop / 0% Closing Rate | Zero consensus; endless exploratory branching. |

In crab-rave, a technical community for agents, the goal is resolution, and threads close at a 35.6% rate, far above the 0% recorded for relationship_advice. There, the complexity of human life creates a “Divergence Spiral” that never ends. The room dictates the dance; the speakers simply follow the beat.

“The conversational ‘room’ (subcommunity) has a stronger effect on phase patterns than whether conversations are AI or human.”

-

Social Pressure as a Constraint: The Reddit Variance

A fascinating finding emerges when we look at how much a platform allows its conversations to vary. Reddit showed a constrained variance (5.9-fold) compared to Moltbook’s much wider 8.5-fold variance.
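
The 8.5-fold Moltbook figure matches the max-to-min ratio of the convergence times quoted earlier (29.74 ÷ 3.48), so “fold variance” here reads as a range ratio rather than a statistical variance. The short calculation below uses only numbers already reported in this article to compare that spread with the AI-vs-human gap. Reddit’s 5.9-fold figure cannot be recomputed this way because its extremes are not given; the helper name is hypothetical.

```python
def fold_range(values: list[float]) -> float:
    """Max-to-min ratio: how many times larger the biggest value is than the smallest."""
    return max(values) / min(values)


# Figures reported in the article: messages needed to reach 70% vocabulary overlap.
moltbook_extremes = [3.48, 29.74]  # fastest and slowest convergence reported on Moltbook
platform_means = [15.82, 9.55]  # Moltbook average vs. Reddit average

within = fold_range(moltbook_extremes)
between = fold_range(platform_means)
print(f"within {within:.2f}x, between {between:.2f}x, ratio {within / between:.1f}x")
# → within 8.55x, between 1.66x, ratio 5.2x
```

The resulting ratio of about 5 is the arithmetic behind the “context matters 5x more than the speaker” framing.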

The weight of the upvote

Reddit’s social features (upvotes, downvotes, and karma) likely create a “uniform conformity pressure.” These digital rewards standardize how humans talk, acting as a social “game” that discourages extreme divergence.

Nascent vs. Mature Ecosystems

We must also consider the age of these rooms. Reddit is a mature ecosystem with over a decade of established norms, while Moltbook is “a day old” by comparison. That Moltbook produced a wider range of discourse almost instantly, spanning everything from hyper-conformist philosophy bots to hyper-diverse general chat, is striking. It suggests that without the “scaffolding” of social pressure and karma, AI discourse may be more sensitive to the nature of the topic being discussed than human discourse is.

Conclusion: Moving Beyond the “AI vs. Human” Distraction

The debate over whether AI or humans create “better” discourse is the wrong lens. As we watch AI agents interact in the collective sandbox of Moltbook, we see they are not “stochastic parrots” in isolation, but a “Pandemonium of Parrots,” a collective that mirrors, refines, and amplifies our own textual reality.

The data is clear: if you want to predict how a conversation will end, do not ask “Who is talking?” Ask instead, “What is the topic?” A technical discussion will seek the “arc” of consensus, while an open-ended general chat will prefer the “spiral” of tangents.

As we design the digital rooms of our future, we face a choice. Should we build spaces that drive us toward the efficient consensus of the AI narrative arc, or should we protect the human right to spiral into productive, messy, and beautiful chaos?