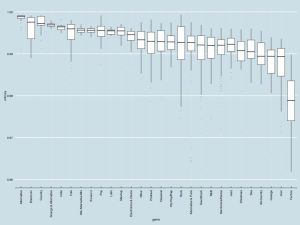

If you’re like me, with a wife nursing in the other room and an infant distracted by any noise or movement, then your mind naturally drifts to the topic of entropy[1]. I saw something on “TV”[2] about music, and began wondering whether different musical styles have different entropy.

Slashing around with a little Perl code, I hoped to look across my music collection and see what there was to see. Sadly, I don’t have the decoding libraries necessary[3], so instead I just looked at the mp3 files themselves, still encoded. While most genres showed a good range of entropy, they were also relatively distinct from one another, and definitely distinct at the extremes.
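For the curious, here is a minimal sketch of that kind of measurement. It is written in Python rather than the Perl I was slashing around with, and it is not the original script. It assumes the measure is plain Shannon entropy over the byte values of the file, and the names `byte_entropy` and `file_entropy` are made up for illustration.

```python
import math
from collections import Counter


def byte_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # how often each byte value occurs
    # Sum p * log2(1/p) over every byte value that actually appears.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def file_entropy(path: str) -> float:
    """Entropy of a file's raw bytes; the file is not decoded in any way."""
    with open(path, "rb") as f:
        return byte_entropy(f.read())
```

A result near 8.0 means the bytes look close to random, while a result near 0.0 means the file is almost entirely one repeated byte. Since mp3 files are already compressed, I would expect them to cluster toward the high end, so any differences between genres would be small ones.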

While I can’t draw any conclusions about how efficient mp3 compression is by genre, we can safely say that the further compressibility of an mp3 file is affected by its genre. I.e., there is room for genre-specific compression algorithms. Which only makes sense, right? If you know more about the structure, you should be able to make better choices.
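One rough way to put a number on “further compressibility” is to run the already-encoded bytes through a general-purpose compressor and see how much smaller they get. The sketch below is Python again, and it assumes zlib is an acceptable stand-in for “a compressor”. The helper name `compression_ratio` is hypothetical, not something from my original script.

```python
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    if not data:
        return 1.0  # nothing to compress, so treat it as incompressible
    compressed = zlib.compress(data, 9)  # 9 is zlib's strongest compression level
    return len(compressed) / len(data)
```

A ratio close to 1.0 (or slightly above it, thanks to zlib’s own overhead) means there is little structure left to squeeze out. Averaging this ratio over the files in each genre would be one way to compare genres.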

- Entropy is a measure of disorder in a closed system, with the scale ranging from completely random to completely static. It is used as a model in information science for a number of things, including compression: entropy can be used as a measure of how much information is provided by the message, rather than by the structure of the message. For example, the patterns of letters in words in the English language follow rules: u follows q, i before e…, etc. The structure defined by these imprecise rules dictates about half of the letters in any given word. Ths s wh y cn rd wrds wth mssng vwls. The thinking is that if you agree on what the rules are, you can communicate with just the symbols that convey additional meaning. Compression looks to take advantage of this by eliminating as much of the structure as possible and keeping just the additional information provided.

Mathematically, entropy is often expressed as the amount of information per symbol, averaged across structural and informational symbols. English has a relative entropy (entropy as a fraction of the maximum possible for its alphabet) of around 0.5, so each letter conveys about half a letter of information; the other half is structure[4]. ↩

- Probably Hulu? ↩

- Darn you, Apple. ↩

- Apparently, this makes English great for crossword puzzles. ↩